Intelligent fusion of local actors in remote environments

The pilot demonstrates how 5G's new functionalities enable high-quality content production with low latency, real-time video and advanced compression

The pilot project on the intelligent fusion of local actors in remote environments aims to demonstrate the opportunities that the new functionalities of 5G networks offer for producing high-quality content. It relies on a high bit rate in both transmission directions together with minimal, controlled latency. The scenario merges dynamic local content with a different background, i.e., one coming from a remote scene.

The pilot addresses several technological challenges. First, combining both content streams into a high-quality result requires minimal, controlled latency. Second, advanced video compression schemes with minimal delay are necessary, which in turn call for new flow control schemes and encoder buffer control. These challenges can only be met with 5G networks. The pilot also incorporates a real-time free-viewpoint video subsystem for capturing visual content of the actors, which requires networks with high uplink capacity and points toward the requirements of 6G networks.

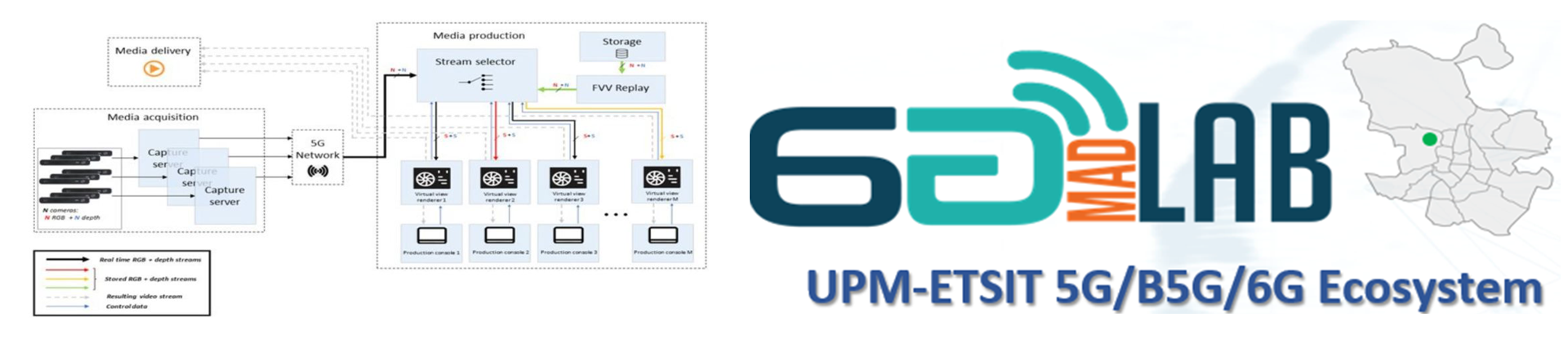

The activities start with the definition of the pilot scenario and its operational requirements. The pilot builds on FVV Live, an end-to-end, low-latency, real-time free-viewpoint video system that covers capture, transmission, synthesis on an edge server, and display and control on a mobile terminal. The acquisition subsystem comprises consumer stereo cameras, which do not require genlock; software synchronization against a distributed shared clock source via PTP (IEEE 1588-2002); and real-time segmentation and depth estimation, which enable layered synthesis and bandwidth savings. The transmission subsystem comprises real-time encoding of all required camera streams in both color and depth; RTP transmission; and lossless encoding of the depth information required for synthesis, achieved by adapting 4:2:0 video structures to carry 12 bits per pixel. The synthesis subsystem uses only the cameras closest to the virtual viewpoint, employs a dense background model precomputed with Retinex + AKAZE + SfM + MVS, and applies layered synthesis to integrate this background model with a live foreground.
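
To make the depth transport step more concrete, the following is a minimal sketch of how 12-bit depth samples could be carried in an 8-bit 4:2:0 frame, which holds 12 bits per pixel on average (8 bits of luma plus two quarter-resolution chroma planes). The layout is an assumption chosen for illustration (high 8 bits in Y, low 4 bits of each 2x2 block split across U and V); the text does not specify the actual FVV Live layout, and the function names `pack_depth_420` and `unpack_depth_420` are hypothetical. The round trip is only exact if the planes are then coded losslessly.

```python
def pack_depth_420(depth, width, height):
    """Pack row-major 12-bit depth samples into 8-bit Y, U, V planes (4:2:0).

    Assumed layout (illustrative, not taken from FVV Live):
      Y[pixel]  = high 8 bits of the depth sample
      U[block]  = low nibbles of the two top pixels of the 2x2 block
      V[block]  = low nibbles of the two bottom pixels of the 2x2 block
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even for 4:2:0")
    if len(depth) != width * height:
        raise ValueError("depth length does not match width * height")
    if any(not 0 <= d <= 0xFFF for d in depth):
        raise ValueError("depth samples must fit in 12 bits")

    y = bytearray(d >> 4 for d in depth)
    cw, ch = width // 2, height // 2
    u = bytearray(cw * ch)
    v = bytearray(cw * ch)
    for by in range(ch):
        for bx in range(cw):
            top = (2 * by) * width + 2 * bx   # index of top-left pixel
            bottom = top + width              # index of bottom-left pixel
            u[by * cw + bx] = ((depth[top] & 0xF) << 4) | (depth[top + 1] & 0xF)
            v[by * cw + bx] = ((depth[bottom] & 0xF) << 4) | (depth[bottom + 1] & 0xF)
    return bytes(y), bytes(u), bytes(v)


def unpack_depth_420(y, u, v, width, height):
    """Invert pack_depth_420 and return the row-major 12-bit depth samples."""
    cw = width // 2
    depth = [0] * (width * height)
    for row in range(height):
        for col in range(width):
            block = (row // 2) * cw + (col // 2)
            packed = u[block] if row % 2 == 0 else v[block]
            nibble = packed >> 4 if col % 2 == 0 else packed & 0xF
            depth[row * width + col] = (y[row * width + col] << 4) | nibble
    return depth
```

With this layout, a frame of `width * height` depth samples occupies exactly `1.5 * width * height` bytes, the same footprint as a regular 8-bit 4:2:0 picture, so it can in principle pass through a standard video pipeline unchanged.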

The pilot integrates the FVV Live system into the communications infrastructure to be installed at the ETS Telecommunication Engineers and adds the intelligent fusion of the foreground containing the local actors with a background coming from a remote scene. This requires uplink channels with sufficient capacity for several texture and depth streams from the cameras closest to the virtual viewpoint, ultra-reliable low-latency communications, and network virtualization features such as those offered by MEC (multi-access edge computing) nodes.
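
As a rough illustration of the fusion step, the sketch below blends a segmented local foreground over a remote background using a per-pixel mask. It assumes standard alpha compositing; the text does not state which blending method the pilot uses, and the function name `fuse_layers` and the pixel representation (lists of RGB tuples) are hypothetical simplifications.

```python
def fuse_layers(foreground, mask, background):
    """Blend a local foreground over a remote background, pixel by pixel.

    foreground, background: lists of (r, g, b) tuples with values in 0..255
    mask: list of alpha values in [0.0, 1.0]; 1.0 keeps the local actor,
          0.0 keeps the remote background (assumed to come from segmentation)
    """
    if not (len(foreground) == len(mask) == len(background)):
        raise ValueError("all layers must have the same number of pixels")
    fused = []
    for fg, alpha, bg in zip(foreground, mask, background):
        if not 0.0 <= alpha <= 1.0:
            raise ValueError("mask values must lie in [0, 1]")
        # Standard alpha compositing applied to each color channel
        fused.append(tuple(round(alpha * f + (1.0 - alpha) * b)
                           for f, b in zip(fg, bg)))
    return fused
```

A binary segmentation mask is the special case where every alpha is 0.0 or 1.0; fractional values along the actor's silhouette would soften the transition between the two layers.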

Preliminary Results